by Maxim Knepfle, CTO Tygron

At the NVIDIA GTC Conference I attended several presentations that touched on a very interesting subject: the need to see the GPU as a throughput machine, and the need to always have more workloads available to keep feeding this beast.

In this blog I describe how we were able to incorporate this concept and thereby reduce the total calculation time of multiple jobs by up to 80%.

Why is this interesting for us?

At Tygron we have spent a lot of time optimizing the performance of a single job, e.g. a flooding or heat-stress simulation. However, in the end it is not always possible to keep the GPU 100% busy.

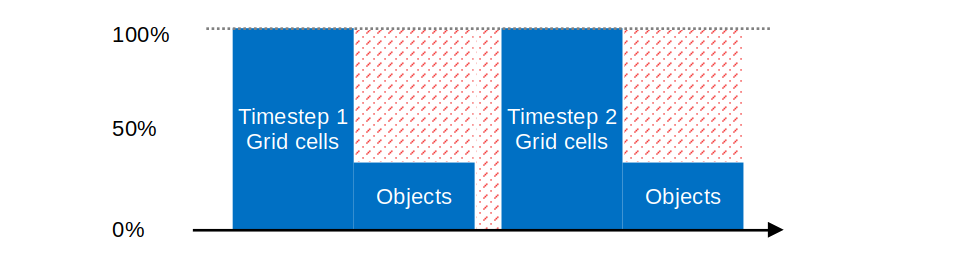

For example, a GPU cluster can do 40,000 calculations in parallel, which is perfect when you are calculating 10 million grid cells in a flooding simulation. But in the next phase this simulation also processes the water objects (weirs, culverts, etc.), and you might only have 10,000 objects, causing usage to drop to 25%. This becomes even worse when a cluster has multiple GPUs working on the same job and not all of them have exactly the same workload. They then have to wait (0% usage) for each other to finish before advancing to the next time step.
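The usage figures in this example follow from simple arithmetic. Below is a minimal sketch, assuming an idealized model in which work is processed in waves of at most 40,000 parallel calculations. The function names and the per-GPU timings are illustrative assumptions, not Tygron's actual measurement method.

```python
import math

def utilization(work_items: int, parallel_capacity: int) -> float:
    """Average fraction of the parallel capacity in use, assuming work is
    processed in waves of at most `parallel_capacity` items (idealized)."""
    if work_items <= 0:
        return 0.0
    waves = math.ceil(work_items / parallel_capacity)
    return work_items / (waves * parallel_capacity)

def step_utilization(busy_seconds: list[float]) -> list[float]:
    """Per-GPU usage within one time step: every GPU waits until the
    slowest one has finished before the next step can start."""
    step = max(busy_seconds)
    return [t / step for t in busy_seconds]

CAPACITY = 40_000  # parallel calculations, taken from the example above

print(f"grid cells:    {utilization(10_000_000, CAPACITY):.0%}")
print(f"water objects: {utilization(10_000, CAPACITY):.0%}")

# Hypothetical per-GPU busy times (seconds) for one time step.
print(step_utilization([2.0, 1.0]))
```

In this model the grid-cell phase fills every wave completely, while the water-object phase fills only a quarter of a single wave.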

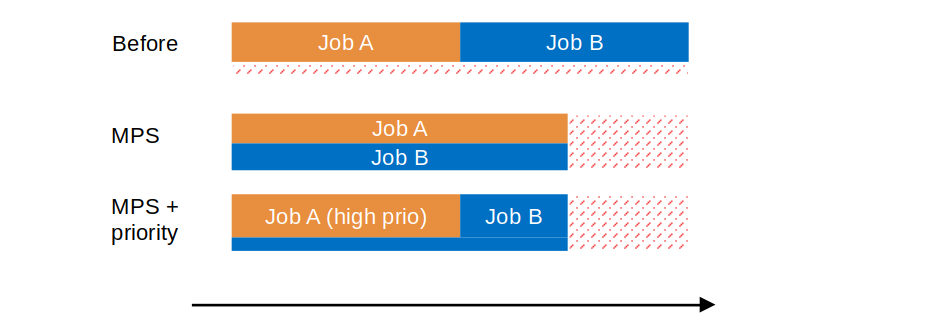

* Although it is certainly possible to further optimize single-job calculations (e.g. by breaking them up into smaller parts), why not also create multiple contexts and let another job use the unused capacity? This will not speed up a single job, but when running two or more jobs simultaneously the total execution time is reduced because the throughput beast is better fed!

* This is of special importance for Tygron, as we see a continuous increase in the number of simulation jobs run on our platform: about 100,000 jobs were processed last year. This can lead to a situation where users sometimes have to wait for a long-running job to finish before their own job can be scheduled. Especially during live sessions or emergencies, where the Tygron Platform is used to immediately calculate the effects of changes to an area, this is not welcome. Therefore there is also a need to prioritize jobs and sometimes temporarily scale back the GPU capacity available to low-priority jobs.
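The first point can be illustrated with a toy model. The sketch below assumes each job is a sequence of phases, each with a duration and the share of the GPU it can use, and compares running jobs one after another with an idealized lower bound for running them side by side. The `Phase` type, both functions, and all numbers are invented for illustration; they are not measurements from the Tygron Platform.

```python
from dataclasses import dataclass

@dataclass
class Phase:
    duration: float      # seconds when the job runs alone (hypothetical)
    gpu_fraction: float  # share of GPU capacity the phase can use, 0..1

Job = list[Phase]

def serial_time(jobs: list[Job]) -> float:
    """Total time when jobs run strictly one after another."""
    return sum(p.duration for job in jobs for p in job)

def ideal_concurrent_time(jobs: list[Job]) -> float:
    """Lower bound when jobs share the GPU perfectly. The total is limited
    either by the combined amount of work or by the longest single job,
    because sharing never makes an individual job faster."""
    total_work = sum(p.duration * p.gpu_fraction for job in jobs for p in job)
    longest_job = max(sum(p.duration for p in job) for job in jobs)
    return max(total_work, longest_job)

# Hypothetical job: a phase that saturates the GPU, then one that does not.
job = [Phase(5.0, 1.0), Phase(5.0, 0.25)]

serial = serial_time([job, job])
shared = ideal_concurrent_time([job, job])
print(f"serial: {serial} s, shared (ideal): {shared} s")
print(f"reduction: {1 - shared / serial:.1%}")
```

A single job gains nothing in this model, which matches the point above: the saving only appears once a second job can fill the idle capacity. A real GPU will not reach this bound.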

Updates

These two requirements resulted in the following architectural updates:

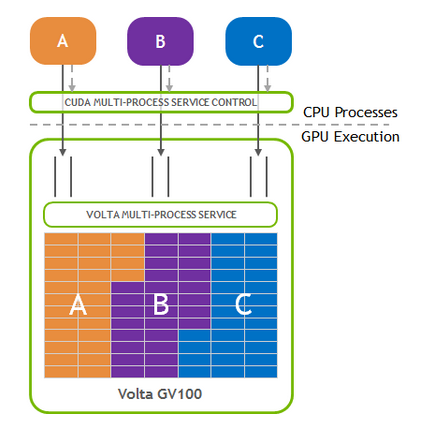

1. Multi Context: A Tygron GPU Cluster now has multiple contexts that can be used for simulation jobs. Each context has dynamically allocated memory based on the job size. This allocation is constantly synchronized with the central platform scheduler, so two contexts can never ask for more memory than is available on the GPUs.

2. Multi-Process Service: With the NVIDIA Multi-Process Service (MPS), a hardware-based GPU scheduler is also activated. This scheduler maps the two contexts into a single one that is then optimally executed by the GPU’s processing cores. This also means there is no overhead from context switching.


3. Priority: Tygron simulation jobs now also have a priority that is passed to the GPU’s stream. MPS uses this value to allocate resources to jobs running in parallel. Long-running jobs automatically get a lower priority, and you can also adjust the default priority by adding a PRIORITY attribute to an overlay.

4. Small Jobs: When running small jobs on e.g. an 8 GPU cluster, the overhead of communicating between GPUs is bigger than the advantage of having 8 devices. Therefore the number of devices allocated to the job is automatically reduced to e.g. 2 out of 8. The other 6 can then be used for other small jobs.
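The memory accounting, priority, and small-job rules above can be sketched as a simple admission check. This is a minimal illustration under stated assumptions, not Tygron's scheduler: the class and field names, the 5 million cell threshold, and the even split of memory across devices are all hypothetical. Priority is modeled here only as the order in which waiting jobs are admitted; the stream priority that MPS uses inside the GPU is not modeled.

```python
from dataclasses import dataclass

SMALL_JOB_CELLS = 5_000_000  # hypothetical threshold for a "small" job
SMALL_JOB_DEVICES = 2        # "e.g. 2 out of 8" in the text above

@dataclass
class Job:
    name: str
    grid_cells: int
    memory_gb: float   # total memory the job's context needs (assumed known)
    priority: int = 0  # higher value is admitted first (assumed convention)

class ClusterScheduler:
    def __init__(self, gpu_count: int, memory_per_gpu_gb: float):
        self.free_gb = [memory_per_gpu_gb] * gpu_count
        self.running = {}  # job name -> (device indices, GB per device)

    def devices_needed(self, job: Job) -> int:
        """Small jobs get fewer devices to avoid inter-GPU overhead."""
        total = len(self.free_gb)
        wanted = SMALL_JOB_DEVICES if job.grid_cells < SMALL_JOB_CELLS else total
        return min(wanted, total)

    def try_start(self, job: Job) -> bool:
        n = self.devices_needed(job)
        per_device = job.memory_gb / n  # assumes memory splits evenly
        # Prefer the devices that currently have the most free memory.
        order = sorted(range(len(self.free_gb)), key=lambda i: -self.free_gb[i])
        chosen = order[:n]
        if any(self.free_gb[i] < per_device for i in chosen):
            return False  # would exceed available memory, so the job waits
        for i in chosen:
            self.free_gb[i] -= per_device
        self.running[job.name] = (chosen, per_device)
        return True

    def finish(self, name: str) -> None:
        """Release the memory reserved for a finished job."""
        devices, per_device = self.running.pop(name)
        for i in devices:
            self.free_gb[i] += per_device

    def schedule(self, pending: list[Job]) -> list[str]:
        """Start as many pending jobs as fit, highest priority first."""
        ordered = sorted(pending, key=lambda j: -j.priority)
        return [job.name for job in ordered if self.try_start(job)]

# Hypothetical cluster: 8 GPUs with 80 GB each.
scheduler = ClusterScheduler(gpu_count=8, memory_per_gpu_gb=80.0)
started = scheduler.schedule([
    Job("flood-large", 500_000_000, 480.0, priority=0),
    Job("heat-small", 2_000_000, 20.0, priority=5),
    Job("flood-small", 3_000_000, 30.0, priority=1),
])
print(started)
```

Because every reservation goes through one object, two contexts can never be granted more memory than a device has free, and a job that does not fit simply stays pending until `finish` releases memory.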

With these architectural updates we see the total simulation time drop by about 30% when executing two jobs, and by up to 80% when many smaller jobs are executed (note: although Tygron can evaluate billions of grid cells, most jobs only have a couple of million).

Available in July

At the time of writing, the new architecture is running on the development server and is about to go into the testing phase. On July 5th this functionality will become available in the Tygron Platform (LTS) in version 2024.6.6.

The update is “inside” the platform, which means that you don’t have to change anything but will have even more high-performance computing power available at your fingertips!
