Today was NVIDIA’s “Kitchen keynote” for the GTC 2020. CEO Jensen Huang showed the latest development in AI, High Performance Computing and a new GPU Accelerator called the A100.

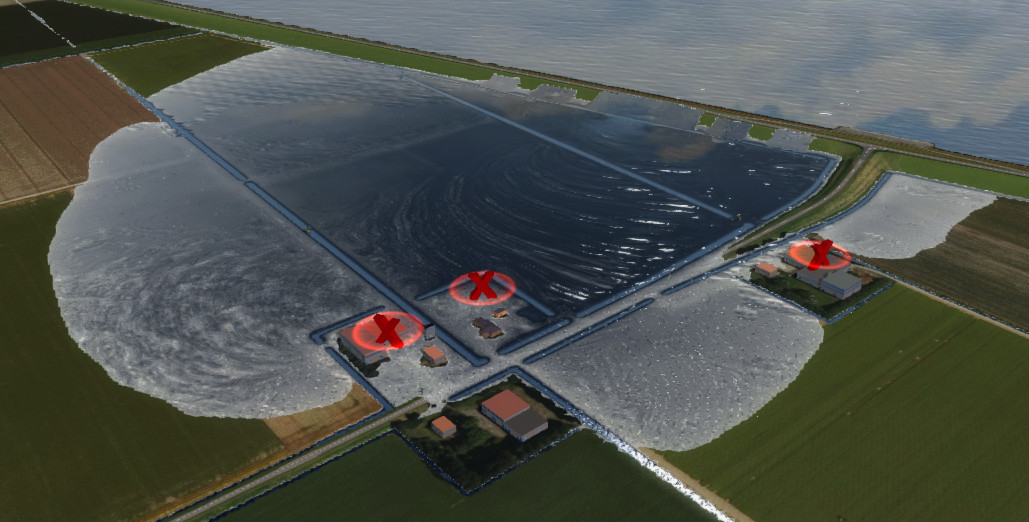

Flooding simulated and visualized by NVIDIA GPU hardware

Tygron has been using NVIDIA GPU’s to display our 3D environment and accelerate our heaviest calculations for many years. We are thus very exited when a new hardware is released, after seeing the keynote we can conclude the following:

1 The Specs: The A100 compared to the V100 used in many Tygron clusters has made another jump in performance specs.

- The amount of CUDA Cores is going up from 5120 to 6912.

- The NVLINK connecting it to other GPU’s has increased from 300GB/sec to 600GB/sec.

- Memory bandwidth from 900GB/sec to 1600GB/sec.

This means that when we add a new cluster to the Tygron Platform, without changing any code, the performance gets a significant boost. Resulting in shorter calculations for floodings, heat-stress, noise pollution, etc.

2 Tensor Cores: However far more interesting are the new Tensor Cores. These special cores allow you to do multiple “multiply and add” calculations (e.g. vector data) in a single call. When you use these efficiently you can increase performance by a factor 10!

Our current generation V100 already has Tensor Cores but they only accept 16 bit numbers which is not precise enough for e.g. flooding calculations and results in an unstable water balance. The A100 changes this by also allowing 32 and 64 bit calculations and can thus boost the “multiply and add” operations dramatically. These are very common throughout our code base.

Our current generation V100 already has Tensor Cores but they only accept 16 bit numbers which is not precise enough for e.g. flooding calculations and results in an unstable water balance. The A100 changes this by also allowing 32 and 64 bit calculations and can thus boost the “multiply and add” operations dramatically. These are very common throughout our code base.

3 Mellanox Highway: Another interesting development is NVIDIA’s purchase of Mellanox. Mellanox develops very fast network solutions used in many supercomputers, including the Tygron Platform. These supercomputers have multiple clusters (or nodes) connected by hi speed networks to allow for even bigger calculations than a single machine could handle. However the biggest problem in doing so is that you need to have a very fast highway between them, otherwise the GPU-units are just “waiting” all the time for new data to process.

Further integration of NVIDIA’s GPU accelerators, NVLINK and Mellanox networking will most likely result is an even faster highway. For example Mellanox Network cards have DPU‘s (Data Processing Unit) that can further facilitate communication between GPU’s on different machine using e.g. Remote Direct Memory Access.

We are looking forward trying out this latest GPU in practice and see how it can accelerate our platform!