by Maxim Knepfle, CTO Tygron

Artificial Intelligence (AI) is hot, and it seems that everyone needs to implement some sort of AI. But what does AI entail, what is the technology behind it and how does this relate to what we are doing at Tygron?

In this blog I’d like to share some insights into our AI journey, which dates back to the very beginning in 2005. At that time I was still taking courses on AI and visualization techniques at the Faculty of Computer Science of Delft University. In September of that year Tygron was founded, and we started developing what is now the Tygron Platform, focusing on three related topics:

1: We started with 3D visualizations of cities and urban development. Driven by the gaming industry, this was a period of rapid development for the Graphics Processing Unit (GPU). New versions of OpenGL introduced features such as hardware instancing, which allowed Tygron to visualize thousands of trees.

2: While rendering all these millions of screen pixels, we wondered: why not use the GPU to run simulations on grid cells instead of pixels? In 2008 this resulted in our first GPU simulations, using CUDA 2.0.

3: The ultimate goal back then, however, was to let AI calculate the optimal design for a set of indicators provided by the users. In 2010 this resulted in an optimization model that could automatically place trees at different locations in a neighborhood in Delft. The optimal locations were determined using multiple weighted indicators (e.g. amount of green, financial costs, land ownership and heat stress), and the model kept iterating until it had identified an optimal solution.
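To make the idea of weighted indicators a bit more concrete, here is a minimal sketch. It is not the 2010 Tygron model: the indicator values, the weights, the adjacency penalty and the swap-based local search are all assumptions chosen for illustration. Each candidate cell gets a weighted sum of its indicators, and the search keeps swapping tree locations for as long as the total score improves.

```python
# Hypothetical indicator weights: positive weights reward, negative weights penalize.
WEIGHTS = {"green": 1.0, "cost": -0.5, "ownership": 2.0, "heat_stress": 1.5}
# Hypothetical penalty for two trees in neighbouring cells (overlapping canopy).
ADJACENCY_PENALTY = 2.0


def cell_score(indicators):
    """Weighted sum of the indicator values of a single candidate cell."""
    return sum(weight * indicators[name] for name, weight in WEIGHTS.items())


def plan_score(plan, candidates):
    """Total weighted score of a set of tree locations, minus adjacency penalties."""
    total = sum(cell_score(candidates[cell]) for cell in plan)
    cells = sorted(plan)
    for i, (ax, ay) in enumerate(cells):
        for bx, by in cells[i + 1:]:
            if abs(ax - bx) + abs(ay - by) == 1:  # direct (4-)neighbours
                total -= ADJACENCY_PENALTY
    return total


def optimize(candidates, n_trees):
    """Swap-based local search: start from the best individual cells, then
    swap one tree at a time while a swap strictly improves the total score.
    This stops at a local optimum, not necessarily the global one."""
    ranked = sorted(candidates, key=lambda c: cell_score(candidates[c]), reverse=True)
    plan = set(ranked[:n_trees])
    best = plan_score(plan, candidates)
    improved = True
    while improved:
        improved = False
        for removed in sorted(plan):
            for added in candidates:
                if added in plan:
                    continue
                trial = (plan - {removed}) | {added}
                score = plan_score(trial, candidates)
                if score > best + 1e-9:
                    plan, best, improved = trial, score, True
                    break
            if improved:
                break
    return plan, best


# Hypothetical candidate cells in one street: (x, y) -> indicator values.
candidates = {
    (0, 0): {"green": 1, "cost": 2, "ownership": 0, "heat_stress": 1},
    (1, 0): {"green": 2, "cost": 2, "ownership": 1, "heat_stress": 2},
    (2, 0): {"green": 2, "cost": 2, "ownership": 1, "heat_stress": 1},
    (3, 0): {"green": 1, "cost": 2, "ownership": 1, "heat_stress": 1},
}
plan, score = optimize(candidates, n_trees=2)
print(sorted(plan), score)  # [(1, 0), (3, 0)] 9.5
```

The two individually best cells, (1, 0) and (2, 0), are neighbours, so the penalty makes that pair worse than (1, 0) and (3, 0). This interaction between locations is what makes iterating on the whole design more useful than simply ranking cells one by one.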

The Delft model proposed intriguing tree placements that had not been considered by humans during the urban planning phase. However, the indicators were kept relatively simple to limit calculation time. Several years later, in 2014, a more sophisticated experiment was conducted by integrating GOAL logic agents, developed at Delft University, with the Tygron Platform. These agents could perform basic reasoning about beliefs and goals from different Stakeholder perspectives.

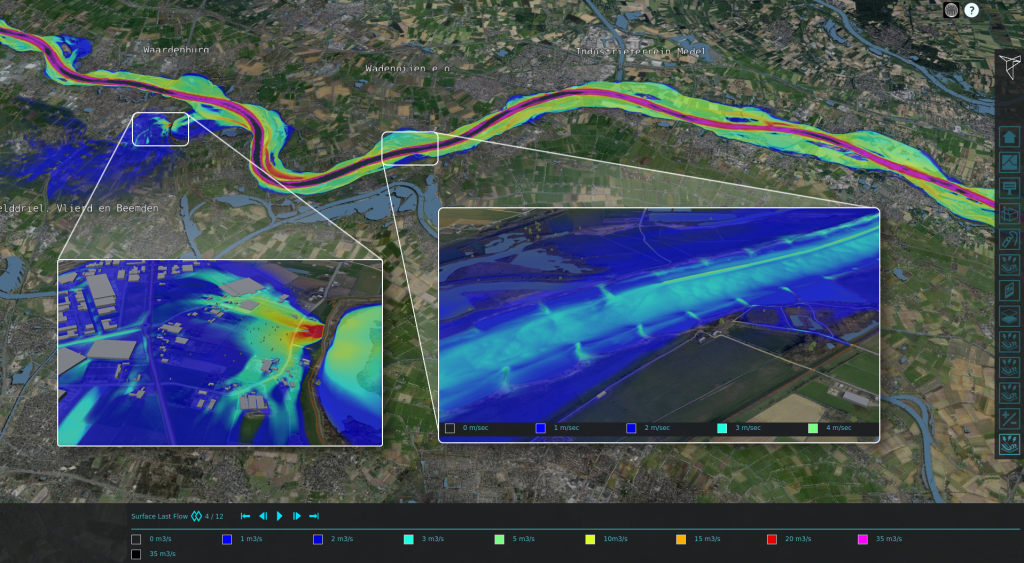

As time passed, GPU hardware advanced, and newer CUDA versions added more and more features. This allowed Tygron to run its first multi-GPU water simulation in 2016 at the “inundatie? rekenmaar!” event, and in 2023 even to simulate the entire Waal river with 25 billion grid cells. But did you know that these same GPUs are used to run the neural networks behind all prominent AI models, such as ChatGPT? The past few years have also seen a significant increase in CUDA features in exactly this area. That’s why we are once again testing several AI techniques to determine whether they could enhance the platform.

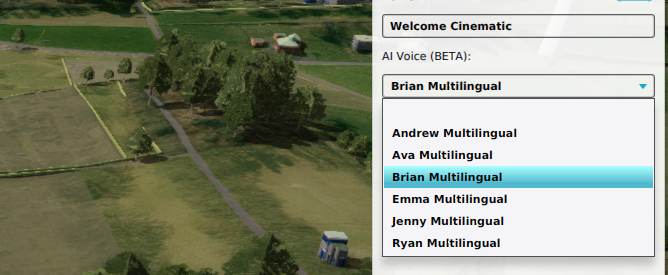

– Audio: A technique that has already matured over the past years is text-to-speech. Using Azure Cognitive Services, we can convert text into natural-sounding human speech. Last year this allowed us to create 60 how-to videos in a very short time. The same model was later also used as a voice assistant in our Free Trials, and with the upcoming release (2024.6.6) it will be available in the Cinematics (fly-through) option, so you can create your own voice-overs. Tygron users will be able to choose from several voices (male and female) and languages. Personally, I was very impressed by the quality of the Dutch voices in this model.

– Vision: The second technique we are working on uses trained models to detect and classify features in Tygron Overlay data. Tygron simulations can become very large (billions of grid cells), and this technique could help improve the quality of the model's input data. An interesting use case would be to detect (small) waterways in satellite imagery, collected for example through a WMS Overlay, and then use a Combo Overlay to compare them with the waterways found in public sources such as the BGT or Top10NL.

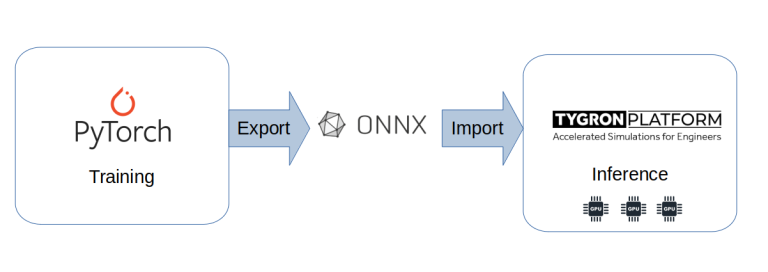

This is done by training a model in an industry-standard framework such as PyTorch and exporting it in the Open Neural Network Exchange (ONNX) format to the Tygron Platform, where it is integrated into our existing GPU infrastructure. The user then gets a new AI Inference Overlay that can interface with all other Tygron datasets and run inference on them. This feature is currently under development and is expected to be included in our upcoming Preview release later this year.
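For the waterway use case, the final comparison step boils down to a cell-by-cell overlay of two masks. The sketch below only mimics that logic in plain Python under stated assumptions: it does not use the actual Combo Overlay formula syntax, and the 0.5 cutoff, the class codes and the function names are hypothetical.

```python
# Hypothetical class codes for the comparison result.
NO_WATER, BOTH, ONLY_DETECTED, ONLY_REGISTERED = 0, 1, 2, 3


def to_mask(probabilities, cutoff=0.5):
    """Turn per-cell model output (0..1) into a boolean waterway mask."""
    return [[p >= cutoff for p in row] for row in probabilities]


def compare_waterways(detected, registered):
    """Cell-by-cell comparison of a detected mask with a registered (e.g. BGT) mask."""
    if len(detected) != len(registered) or any(
        len(d) != len(r) for d, r in zip(detected, registered)
    ):
        raise ValueError("both grids must have the same shape")
    result = []
    for row_d, row_r in zip(detected, registered):
        out = []
        for d, r in zip(row_d, row_r):
            if d and r:
                out.append(BOTH)
            elif d:
                out.append(ONLY_DETECTED)  # candidate waterway missing from the public source
            elif r:
                out.append(ONLY_REGISTERED)  # registered, but not seen in the imagery
            else:
                out.append(NO_WATER)
        result.append(out)
    return result


probabilities = [[0.9, 0.7, 0.1], [0.2, 0.8, 0.3]]  # hypothetical model output
registered = [[True, False, False], [False, True, True]]  # hypothetical public data
result = compare_waterways(to_mask(probabilities), registered)
print(result)  # [[1, 2, 0], [0, 1, 3]]
```

The cells marked `ONLY_DETECTED` are the interesting ones for improving input data quality: they point at possible waterways that the public source does not contain and that a modeller could then check.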

– Language: Large language models (LLMs) are currently a very hot topic with the advent of generative models like ChatGPT, and we are researching whether they could help Tygron users. One example is a co-pilot that can answer questions about how the platform works or help create TQL statements. A question such as “what is the current water level on the main road?” would be translated into an existing Tygron query statement that provides the answer and navigates to that location in the 3D visualization. For this, the LLM would need to be specifically trained on our Wiki and on the TQL syntax. While the previous use cases have made it onto our development roadmap, we have not yet decided on this one. If you believe this would be a valuable addition, please let us know.

– Further down the roadmap, we are still interested in continuing our research into optimal design (2010). With the ability to run extensive simulations (HPC) and AI neural networks on our GPUs, this vision may one day become reality. During our visit to the NVIDIA GTC event, we also saw researchers working on hybrid HPC and AI models. In such a setup you might, for instance, compute one simulation timestep with a physics-based model followed by one or more timesteps using AI techniques. Despite its promise, this approach has not yet yielded substantial speed-ups compared to purely physics-based GPU simulation.
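The hybrid scheme can be sketched as a simple time-stepping loop. This is only an illustration under assumptions: the toy drainage "physics" and the "surrogate" multiplier stand in for a real GPU water model and a trained neural network, and all function names are hypothetical.

```python
from typing import Callable, List, Tuple

State = List[float]  # e.g. water depth per grid cell
Step = Callable[[State], State]


def hybrid_run(state: State, physics_step: Step, ai_step: Step,
               n_steps: int, ai_steps_per_physics: int) -> Tuple[State, List[str]]:
    """Alternate one physics-based timestep with up to `ai_steps_per_physics`
    AI timesteps until `n_steps` timesteps have been taken in total."""
    log: List[str] = []
    while len(log) < n_steps:
        state = physics_step(state)  # full physics-based timestep
        log.append("physics")
        for _ in range(ai_steps_per_physics):
            if len(log) >= n_steps:
                break
            state = ai_step(state)  # cheaper approximation; may add error
            log.append("ai")
    return state, log


# Toy stand-ins: 10% of the water drains per step; the "AI" step is a
# deliberately imperfect approximation of the same behaviour.
def physics(state: State) -> State:
    return [h * 0.9 for h in state]


def surrogate(state: State) -> State:
    return [h * 0.91 for h in state]


final, log = hybrid_run([1.0, 2.0], physics, surrogate, n_steps=7, ai_steps_per_physics=2)
print(log)  # ['physics', 'ai', 'ai', 'physics', 'ai', 'ai', 'physics']
```

The parameter `ai_steps_per_physics` makes the trade-off explicit: more AI steps only save time if each one is much cheaper than a physics step, while every AI step may add approximation error (here 0.91 instead of 0.9 per step). That balance is one reason such schemes do not automatically beat a fast physics-only GPU model.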

In conclusion, I believe GPUs are highly versatile: they can produce intricately detailed 3D visualizations, execute physics-based simulation models (HPC) and, with the assistance of AI, interpret vast datasets to enhance data quality. By integrating these three technologies so that they reinforce each other, we anticipate propelling the Tygron roadmap forward in the years ahead!
